Have you developed, or are you in the process of creating, an API Server that will be used in a production or cloud environment? In this 4th instalment of my Node JS Performance Optimizations series, I show you how to test the availability of your API Server, so that you can understand how many requests per second it can handle whilst performing heavy-duty tasks.

This is a very important measure, especially for production environments, because the last thing you want is to have incoming requests queued as a result of your API Server peaking and not freeing up resources often enough.

A Quick Note

While I’ll be referencing NodeJS in this article, most of the theory and principles mentioned can apply to any platform and environment.


Which Benchmark Testing Tool To Use

The tool I’ll be using for my tests is called AutoCannon. It’s written entirely in NodeJS and is very similar to Apache Benchmark, Artillery, k6, wrk, etc. This is good news because you are not forced to use AutoCannon to follow along with this article. If your benchmark testing tool can run load tests against HTTP endpoints and report the average requests per second, you’re good to go.

That being said, should you wish to make use of AutoCannon, it’s as easy as installing it globally as an NPM Module:

```shell
npm i -g autocannon
```
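To make it clearer what these tools actually measure, here is a minimal sketch of a load tester written with nothing but Node's built-in `http` module. It works under simplifying assumptions (a fixed pool of keep-alive connections, each sending requests back to back) and is an illustration, not a replacement for AutoCannon; the `measureRps` function and its options are hypothetical.

```javascript
'use strict'

const http = require('http')

// Hypothetical helper: keep `connections` requests in flight against `url` for
// `durationMs`, then report how many completed and the average requests per second.
async function measureRps (url, { connections = 10, durationMs = 10000 } = {}) {
  // A keep-alive agent capped at `connections` sockets mimics a fixed connection pool
  const agent = new http.Agent({ keepAlive: true, maxSockets: connections })
  const deadline = Date.now() + durationMs
  let completed = 0

  // Send one GET request; resolve once the whole response has been received
  const sendRequest = () => new Promise((resolve, reject) => {
    http.get(url, { agent }, (res) => {
      res.resume() // discard the body, we only count completed responses
      res.on('end', resolve)
    }).on('error', reject)
  })

  // Each simulated connection sends requests back to back until the deadline
  const runConnection = async () => {
    while (Date.now() < deadline) {
      await sendRequest()
      completed++
    }
  }

  const start = Date.now()
  await Promise.all(Array.from({ length: connections }, runConnection))
  const elapsedSeconds = (Date.now() - start) / 1000
  agent.destroy() // close the pooled sockets

  return { requests: completed, requestsPerSecond: completed / elapsedSeconds }
}

module.exports = { measureRps }
```

For example, `measureRps('http://127.0.0.1:6000/pulse').then(console.log)` roughly mirrors a default AutoCannon run (10 connections for 10 seconds), although the figures will not match AutoCannon's report exactly.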

How To Test The Availability Of Your API Server

Firstly, there is an online Source Code Repo that you can reference should you wish to run these examples on your local environment. All you will need is NodeJS installed.

The snippet of code below gets you about 99% of the way there. All that's missing is a package.json that lists express, dotenv and bcryptjs as dependencies, and a .env file that sets NODE_ENV to production and PORT to 6000 (refer to the sample code in the Source Code Repo, or to the accompanying video, should you struggle). Add the following to an app.js file:

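```javascript
'use strict'

// Load environment variables (PORT, NODE_ENV) from a .env file
require('dotenv').config()

const Express = require('express')
const HTTP = require('http')
const BCrypt = require('bcryptjs')

const App = Express()

// Router Setup

// Lightweight endpoint: no business logic, returns an empty response body
App.get('/pulse', (req, res) => {
  res.send('')
})

// Heavy endpoint: salts and hashes a password with BcryptJS (cost factor 8),
// which runs in pure JavaScript on the main thread
App.get('/stress', async (req, res) => {
  const hash = await BCrypt.hash('this is a long password', 8)
  res.send(hash)
})

// Server Setup

const port = process.env.PORT || 6000 // fall back to 6000 if PORT is not set
const server = HTTP.createServer(App)

server.listen(port, () => {
  console.log('NodeJS Performance Optimizations listening on: ', port)
})
```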

This is a very simple Express Server that exposes 2 Routes:

- /pulse

- /stress

The /pulse endpoint represents a very lightweight API that contains no business logic and returns an empty string as a response. There should be no reason for any delays when processing this endpoint.

The /stress endpoint, on the other hand, uses BcryptJS to salt and hash a password. This is quite a heavy process, and because BcryptJS is written entirely in JavaScript, the work runs on the main thread and will end up blocking the Event Loop quite badly.
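To see why CPU-heavy JavaScript on the main thread hurts, the sketch below measures how long a zero-delay timer takes to fire while the main thread is busy. BcryptJS is not used here: as an assumption, Node's built-in `crypto.pbkdf2Sync` stands in for the heavy hashing, and the callback-based `crypto.pbkdf2` (which is handed off to libuv's thread pool) is included for contrast. The helper names are hypothetical.

```javascript
'use strict'

const crypto = require('crypto')

// Hypothetical helper: schedule a zero-delay timer, run `work`, and report how
// many milliseconds passed before the timer fired. A busy main thread delays it,
// just as it would delay an incoming request.
function measureTimerLag (work) {
  return new Promise((resolve) => {
    const scheduledAt = process.hrtime.bigint()
    setTimeout(() => {
      resolve(Number(process.hrtime.bigint() - scheduledAt) / 1e6)
    }, 0)
    work()
  })
}

async function main () {
  // Stand-in for BcryptJS: synchronous hashing runs on the main thread
  const syncLag = await measureTimerLag(() => {
    crypto.pbkdf2Sync('this is a long password', 'salt', 200000, 64, 'sha512')
  })

  // For contrast: the callback version is handed off to libuv's thread pool
  const asyncLag = await measureTimerLag(() => {
    crypto.pbkdf2('this is a long password', 'salt', 200000, 64, 'sha512', () => {})
  })

  console.log(`Timer lag with pbkdf2Sync: ${syncLag.toFixed(1)} ms`)
  console.log(`Timer lag with pbkdf2:     ${asyncLag.toFixed(1)} ms`)
  return { syncLag, asyncLag }
}

module.exports = { measureTimerLag, main }
```

Running `main()` should show a timer lag roughly equal to the hashing time for the synchronous version and a much smaller lag for the thread-pool version; the exact figures depend on your machine.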

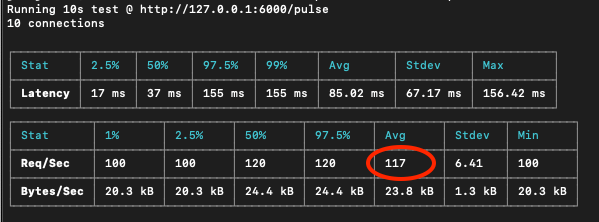

For our first test, we’re going to use AutoCannon to run a load test against the /pulse endpoint, to see how many requests per second the API Server can handle when it’s running idle. The process is as follows:

1. Start up the Express Server

```shell
node app
```

2. Run the AutoCannon test

```shell
autocannon http://127.0.0.1:6000/pulse
```

This is a simple load test that runs 10 concurrent connections for 10 seconds.

After the test run, you should receive a report that includes the average requests per second. Depending on the speed of your machine, it should be somewhere between 15 000 and 25 000 requests per second.

Now that we have our baseline measurement, let’s see what happens when the API Server is performing heavy-duty tasks:

1. Make sure the Express server is running

```shell
node app
```

2. Open 2 Terminal windows for the tests to be performed

3. In window 1, run AutoCannon against the /stress endpoint for a duration of 30 seconds

```shell
autocannon -d 30 http://127.0.0.1:6000/stress
```

4. In window 2, while the /stress test is running, run AutoCannon against the /pulse endpoint, same as before

```shell
autocannon http://127.0.0.1:6000/pulse
```

Make sure the /pulse test runs to completion whilst the /stress test is still running.

After this test run, you should see a significant drop in the requests per second for the /pulse test. In my case, it fell to just 117 requests per second.

As you can imagine, this is a frightening result, and one that needs to be addressed sooner rather than later.
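If you would like to reproduce this experiment from a single script, here is a rough sketch using only Node built-ins. Several assumptions apply: the server runs in a worker thread so that its blocked Event Loop does not also stall the load generator on the main thread, `crypto.pbkdf2Sync` stands in for BcryptJS on /stress, and the `hammer` and `compare` helpers are hypothetical. The requests-per-second figures are approximate, because requests still in flight at the deadline are allowed to finish.

```javascript
'use strict'

const http = require('http')
const { Worker } = require('worker_threads')

// Server code evaluated inside a worker thread (it gets its own Event Loop).
// pbkdf2Sync is an assumed stand-in for BcryptJS on the /stress route.
const serverSource = `
  const http = require('http')
  const crypto = require('crypto')
  const { parentPort } = require('worker_threads')
  const server = http.createServer((req, res) => {
    if (req.url === '/stress') {
      const hash = crypto.pbkdf2Sync('this is a long password', 'salt', 100000, 64, 'sha512')
      return res.end(hash.toString('hex'))
    }
    res.end('') // /pulse: no business logic
  })
  server.listen(0, '127.0.0.1', () => parentPort.postMessage(server.address().port))
`

// Hypothetical helper: keep `connections` requests in flight until `deadline`
async function hammer (url, connections, deadline) {
  const agent = new http.Agent({ keepAlive: true, maxSockets: connections })
  let completed = 0
  const loop = async () => {
    while (Date.now() < deadline) {
      await new Promise((resolve, reject) => {
        http.get(url, { agent }, (res) => { res.resume(); res.on('end', resolve) })
          .on('error', reject)
      })
      completed++
    }
  }
  await Promise.all(Array.from({ length: connections }, loop))
  agent.destroy()
  return completed
}

// Hypothetical helper: /pulse requests per second when idle vs. while /stress is loaded
async function compare (durationMs = 2000) {
  const worker = new Worker(serverSource, { eval: true })
  const port = await new Promise((resolve) => worker.once('message', resolve))
  const base = `http://127.0.0.1:${port}`

  // 1. Baseline: /pulse on its own
  const idle = await hammer(`${base}/pulse`, 10, Date.now() + durationMs)

  // 2. /pulse again, while /stress is hit over the same window
  const deadline = Date.now() + durationMs
  const [underStress] = await Promise.all([
    hammer(`${base}/pulse`, 10, deadline),
    hammer(`${base}/stress`, 10, deadline)
  ])

  await worker.terminate()
  const seconds = durationMs / 1000
  return { idleRps: idle / seconds, stressedRps: underStress / seconds }
}

module.exports = { compare }
```

`compare().then(console.log)` prints the /pulse requests per second when idle and while /stress is under load; the second figure should be far lower, though the exact values depend on your machine.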

What Does This Mean In The Real World

While this example is contrived, it forms a template for the kind of tests you should be running in your own environment. Identify when your API Server is running at its peak, then load test the lightweight APIs that belong to that same server. You need to determine whether those requests can still be processed without much delay, or whether they get blocked because your code isn't managing the Event Loop well enough.
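One way to spot this kind of blocking outside a benchmark is to sample Event Loop delay from inside the server itself. The sketch below uses Node's built-in `perf_hooks.monitorEventLoopDelay`; the 10 ms resolution, the 5-second reporting interval and the helper names are illustrative assumptions rather than recommended values.

```javascript
'use strict'

const { monitorEventLoopDelay } = require('perf_hooks')

// Sample the Event Loop every 10 ms (an illustrative choice); delays are recorded in nanoseconds
const histogram = monitorEventLoopDelay({ resolution: 10 })

// Hypothetical helper: summarise the recorded delays in milliseconds
function eventLoopDelaySummary () {
  const toMs = (ns) => Number((ns / 1e6).toFixed(1))
  return {
    meanMs: toMs(histogram.mean),
    p99Ms: toMs(histogram.percentile(99)),
    maxMs: toMs(histogram.max)
  }
}

// Hypothetical helper: log a summary every `intervalMs`, then start a fresh window
function startEventLoopMonitoring (intervalMs = 5000) {
  histogram.enable()
  const timer = setInterval(() => {
    console.log('Event Loop delay:', eventLoopDelaySummary())
    histogram.reset()
  }, intervalMs)
  timer.unref() // don't keep the process alive just for monitoring
  return timer
}

module.exports = { histogram, eventLoopDelaySummary, startEventLoopMonitoring }
```

Calling `startEventLoopMonitoring()` once at startup in app.js and then re-running the /stress test would be expected to show the p99 and max values climbing while /stress is under load.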

How Do I Fix The Problem?

Well, I have good news: as mentioned at the start, I am busy with a series on “Node JS Performance Optimizations”. Using techniques from the content I’ve already published, as well as content still to come, I managed to increase the requests per second for the /pulse test (while the /stress test was running) from 117 to over 4 000.

What you want to do is subscribe to my Bleeding Code YouTube Channel, as I publish everything there first, almost weekly. There are already 4 videos in this series, an important one being “Managing The Event Loop Phases”.

I hope this article proved valuable. Stay tuned for more to come 😎

Cheers

Originally published at bleedingcode.com on August 31, 2020.